The standard model of the universe relies on just six numbers. Using a new approach powered by artificial intelligence, researchers at the Flatiron Institute and their colleagues extracted information hidden in the distribution of galaxies to estimate the values of five of these so-called cosmological parameters with markedly improved precision.

The results were a significant improvement over the values produced by previous methods. Compared to conventional techniques using the same galaxy data, the approach yielded less than half the uncertainty for the parameter describing the clumpiness of the universe’s matter. The AI-powered method also closely agreed with estimates of the cosmological parameters based on observations of other phenomena, such as the universe’s oldest light.

The researchers present their method, the Simulation-Based Inference of Galaxies (or SimBIG), in a series of recent papers, including a new study published August 21 in Nature Astronomy.

Generating tighter constraints on the parameters while using the same data will be crucial to studying everything from the composition of dark matter to the nature of the dark energy driving the universe apart, says study co-author Shirley Ho, a group leader at the Flatiron Institute’s Center for Computational Astrophysics (CCA) in New York City. That’s especially true as new surveys of the cosmos come online over the next few years, she says.

“Each of these surveys costs hundreds of millions to billions of dollars,” Ho says. “The main reason these surveys exist is because we want to understand these cosmological parameters better. So if you think about it in a very practical sense, these parameters are worth tens of millions of dollars each. You want the best analysis you can to extract as much knowledge out of these surveys as possible and push the boundaries of our understanding of the universe.”

The six cosmological parameters describe the amount of ordinary matter, dark matter and dark energy in the universe and the conditions following the Big Bang, such as the opacity of the newborn universe as it cooled and whether mass in the cosmos is spread out or in big clumps. The parameters “are essentially the ‘settings’ of the universe that determine how it operates on the largest scales,” says Liam Parker, co-author of the study and a research analyst at the CCA.

One of the most important ways cosmologists calculate the parameters is by studying the clustering of the universe’s galaxies. Previously, these analyses looked only at the large-scale distribution of galaxies.

“We haven’t been able to go down to small scales,” says ChangHoon Hahn, an associate research scholar at Princeton University and lead author of the study. “For a couple of years now, we’ve known that there’s additional information there; we just didn’t have a good way of extracting it.”

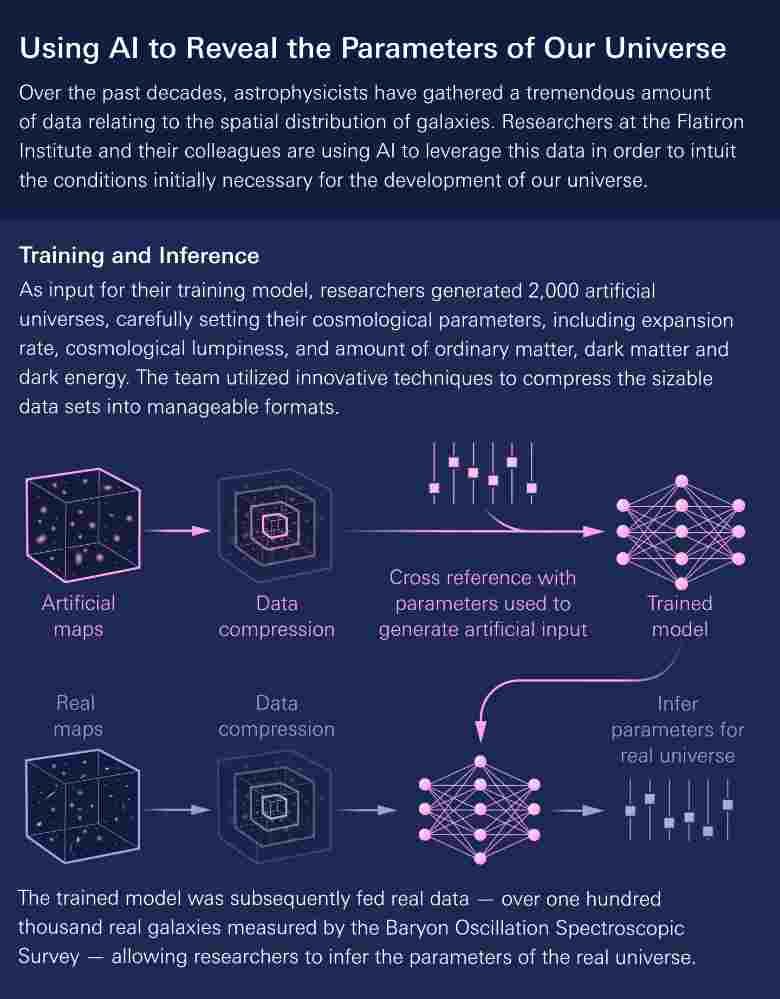

Hahn proposed a way to leverage AI to extract that small-scale information. His plan had two phases. First, he and his colleagues would train an AI model to determine the values of the cosmological parameters based on the appearance of simulated universes. Then they would show the trained model real observations of how galaxies are distributed.
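The two-phase plan can be illustrated with a deliberately tiny toy problem. In the Python sketch below, everything is a hypothetical stand-in: `simulate` produces a fake "universe" whose single parameter `theta` controls how spread out its values are, `summary` compresses each data set into a few numbers, and a linear least-squares fit plays the role of the AI model. This is not SimBIG's code or network; real simulation-based inference typically learns a full probability distribution for the parameters rather than a single best guess.

```python
import numpy as np

# Fixed seed so the toy run is reproducible.
rng = np.random.default_rng(0)


def simulate(theta, n=1000):
    """Hypothetical toy 'universe': n numbers whose spread is set by the parameter theta."""
    return rng.normal(0.0, theta, size=n)


def summary(data):
    """Compress a data set into a short feature vector: a constant term and the sample spread."""
    return np.array([1.0, np.std(data)])


# Phase 1: train on simulations whose parameter values are known.
train_thetas = rng.uniform(0.5, 1.5, size=2000)
features = np.stack([summary(simulate(t)) for t in train_thetas])
# A linear least-squares fit stands in for the neural network.
weights, *_ = np.linalg.lstsq(features, train_thetas, rcond=None)

# Phase 2: show the trained model "observed" data whose parameter it was never told.
true_theta = 1.1
observed = simulate(true_theta)
estimate = float(summary(observed) @ weights)
print(f"true {true_theta}, estimated {estimate:.3f}")
```

The design point that carries over is that the model is never given a formula linking `theta` to the data. It learns that link only from simulations with known answers and is then applied unchanged to the "observed" data.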

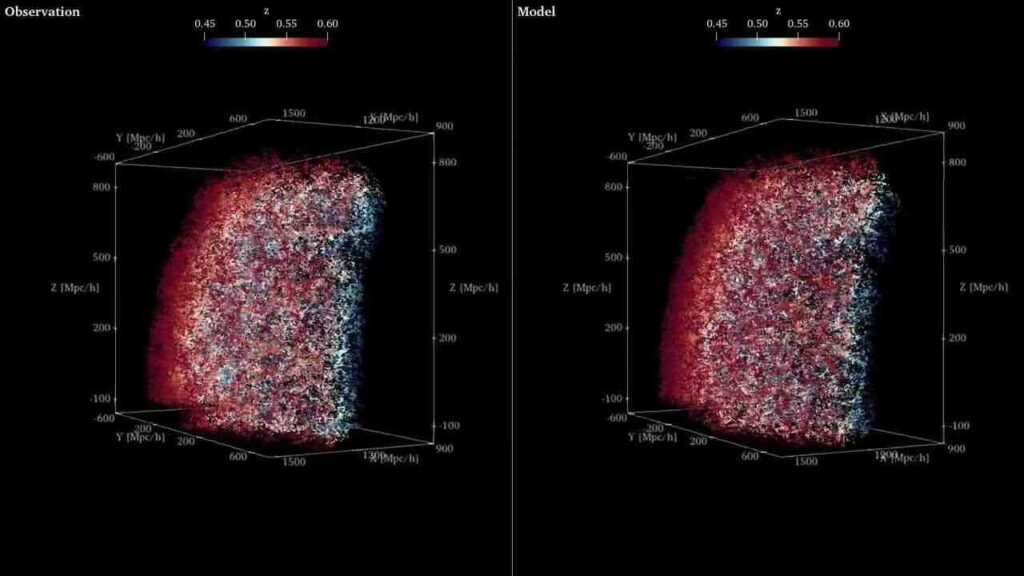

Hahn, Ho, Parker and their colleagues trained their model by showing it 2,000 box-shaped universes from the CCA-developed Quijote simulation suite, with each universe created using different values for the cosmological parameters. The researchers even made the 2,000 universes look like data from real galaxy surveys, complete with flaws introduced by the atmosphere and the telescopes themselves, to give the model realistic practice.

“That’s a large number of simulations, but it’s a manageable amount,” Hahn says. “If you didn’t have the machine learning, you’d need hundreds of thousands.”

By ingesting the simulations, the model learned over time how the values of the cosmological parameters correlate with small-scale differences in the clustering of galaxies, such as the distance between individual pairs of galaxies. SimBIG also learned how to extract information from the bigger-picture arrangement of the universe’s galaxies by looking at three or more galaxies at a time and analyzing the shapes they form, from long, stretched triangles to compact equilateral ones.
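The two kinds of information described above, distances between pairs of galaxies and the shapes of triangles formed by galaxy triplets, can be sketched in a few lines of Python. The random galaxy positions and the functions `pair_separation_counts` and `triangle_elongation` are hypothetical illustrations, far simpler than the clustering statistics used in an actual survey analysis, which must also account for survey boundaries and other observational effects.

```python
from itertools import combinations

import numpy as np

rng = np.random.default_rng(1)
# Hypothetical galaxy positions scattered uniformly in a 100-unit cube.
galaxies = rng.uniform(0.0, 100.0, size=(200, 3))


def pair_separation_counts(points, bins):
    """Histogram of the distance between every pair of points (a two-point statistic)."""
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    i, j = np.triu_indices(len(points), k=1)  # count each unordered pair once
    counts, _ = np.histogram(dists[i, j], bins=bins)
    return counts


def triangle_elongation(a, b, c):
    """Longest side over shortest side: 1.0 for an equilateral triangle, large for a stretched one."""
    sides = sorted(np.linalg.norm(p - q) for p, q in ((a, b), (b, c), (c, a)))
    return sides[2] / sides[0]


# Pair counts in 10-unit distance bins (the cube's diagonal is about 173 units).
pair_counts = pair_separation_counts(galaxies, bins=np.linspace(0.0, 180.0, 19))
# Shape of every triangle formed by the first 30 galaxies (4,060 triangles).
elongations = [triangle_elongation(*galaxies[list(t)]) for t in combinations(range(30), 3)]
```

Tallies like `pair_counts` and the spread of `elongations` are the kind of summaries whose values shift as the cosmological parameters change; a model trained on simulations learns how.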

With the model trained, the researchers presented it with 109,636 real galaxies measured by the Baryon Oscillation Spectroscopic Survey. As they hoped, the model leveraged small-scale and large-scale details in the data to boost the precision of its cosmological parameter estimates. Those estimates were so precise that they were equivalent to a traditional analysis using around four times as many galaxies.
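The "four times as many galaxies" comparison matches a standard back-of-the-envelope rule. Assuming, as an idealization, that a traditional analysis has an uncertainty that shrinks as the inverse square root of the number of galaxies $N$ (with $C$ a hypothetical constant), roughly halving the uncertainty is worth the same as quadrupling the sample. Real survey errors do not follow this rule exactly, so treat it as a consistency check rather than as how the published figure was derived.

```latex
\sigma_{\text{trad}}(N) = \frac{C}{\sqrt{N}}
\qquad\Longrightarrow\qquad
\sigma_{\text{SimBIG}} \approx \tfrac{1}{2}\,\sigma_{\text{trad}}(N_{\text{BOSS}})
= \frac{C}{2\sqrt{N_{\text{BOSS}}}}
= \frac{C}{\sqrt{4\,N_{\text{BOSS}}}}
= \sigma_{\text{trad}}(4\,N_{\text{BOSS}})
```

With $N_{\text{BOSS}} = 109{,}636$, that corresponds to a traditional analysis of roughly 440,000 galaxies.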

That’s important, Ho says, because the universe only has so many galaxies. By getting higher precision with less data, SimBIG can push the limits of what’s possible.

One exciting application of that precision, Hahn says, will be probing the cosmological crisis known as the Hubble tension. The tension arises from mismatched estimates of the Hubble constant, which describes how fast the universe is expanding today.

Calculating the Hubble constant requires gauging distances across the universe with “cosmic rulers.” Estimates based on the distances to exploding stars called supernovae in faraway galaxies are around 10 percent higher than those based on the spacing of fluctuations in the universe’s oldest light.
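In the simplest picture, Hubble's law links a galaxy's recession velocity $v$ to its distance $d$, so the Hubble constant follows from dividing one by the other. The worked example below, with an illustrative factor of 1.1, shows why a cosmic ruler that makes distances come out shorter for the same measured velocities yields a correspondingly higher Hubble constant. This is a simplification: the value from the universe's oldest light is inferred through the full cosmological model, not from a direct velocity-distance fit.

```latex
v = H_0\, d
\quad\Longrightarrow\quad
H_0 = \frac{v}{d},
\qquad
d' = \frac{d}{1.1}
\quad\Longrightarrow\quad
H_0' = \frac{v}{d'} = 1.1\, H_0
```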

New surveys coming online in the next few years will capture more of the universe’s history. Pairing data from those surveys with SimBIG will better reveal the extent of the Hubble tension, and whether the mismatch can be resolved or if it necessitates a revised model of the universe, Hahn says. “If we measure the quantities very precisely and can firmly say that there is a tension, that could reveal new physics about dark energy and the expansion of the universe,” he says.

Hahn, Ho and Parker worked on the study alongside Michael Eickenberg of the Flatiron Institute’s Center for Computational Mathematics (CCM), Pablo Lemos of the CCA, Chirag Modi of the CCA and the CCM, Bruno Régaldo-Saint Blancard of the CCM, Simons Foundation president David Spergel, Jiamin Hou of the University of Florida, Elena Massara of the University of Waterloo, and Azadeh Moradinezhad Dizgah of the University of Geneva.

Reference: ChangHoon Hahn et al., Cosmological constraints from non-Gaussian and nonlinear galaxy clustering using the SimBIG inference framework, Nature Astronomy (2024). DOI: 10.1038/s41550-024-02344-2